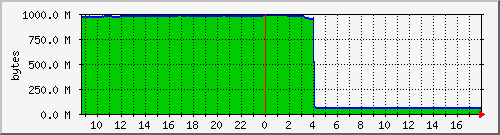

At around 4:00am, the GoogleBot latched on to my server and started pounding a couple of PHP scripts that are very CPU intensive (one of them resizes pictures, another is very database intensive). For some reason that I'm still investigating, my web server (apache) started gobbling up memory to try and keep up with the requests from the GoogleBot, eventually causing the machine to become completely unresponsive. See below for a graph of free system memory -- see if you can find the point where the GoogleBot found the server :-)

Now, Google follows proper web crawler etiquette and was only requesting a page every few seconds, but due to the resource intensive nature of the pages being requested, that persistent yet relentless load was enough to bring my server to its knees. I'm to blame for not having a properly coded robots.txt file, and also for not employing sufficient caching of the CPU-heavy scripts.

But I think Google has some culpability too, as they should be able to detect when they are crushing a server (based on a sudden rash of "404 not found" errors or other metrics) and they should back off for a while. They should also periodically check robots.txt for changes once they've started crawling a site (I quickly added a "Disallow:" line to protect my scripts, but the GoogleBot didn't seem to check it and kept on going.) The total GoogleBot access count according to my weblogs is currently 22,207 and climbing (they are still hitting my server every few seconds).

At first I suspected a hardware error since my server would crash at random intervals after being rebooted. It turns out that this random time was just the amount of time it took GoogleBot to re-latch on to my server after knocking it out of commission.

In debugging this, I swapped out every piece of hardware possible on the system before looking at the software installation as the possible culprit. Wondering if somebody had found a security exploit, I made sure that all of my Gentoo packages were up to date, and also recompiled the Linux kernel to the latest version. Recompiling the kernel turned out to be the pivotal action, as one of the new features was some sort of fail safe that starts killing off processes if the system runs out of memory. This enabled my system to stay alive long enough for me to see the server logs, which revealed a gazillion requests coming in from 66.249.65.239 (which, a reverse DNS lookup revealed as a googlebot server).

For now, I have simply blocked that IP address from accessing any pages on my server. Once things have settled down, I will re-allow access (don't want to be left off of the Google search index!). By then, my new robots.txt file should be in effect, and hopefully I won't have any more problems.

No hard feelings toward Google, of course. It's really my fault that this happened... amazing that it took this long for this sort of thing to occur!